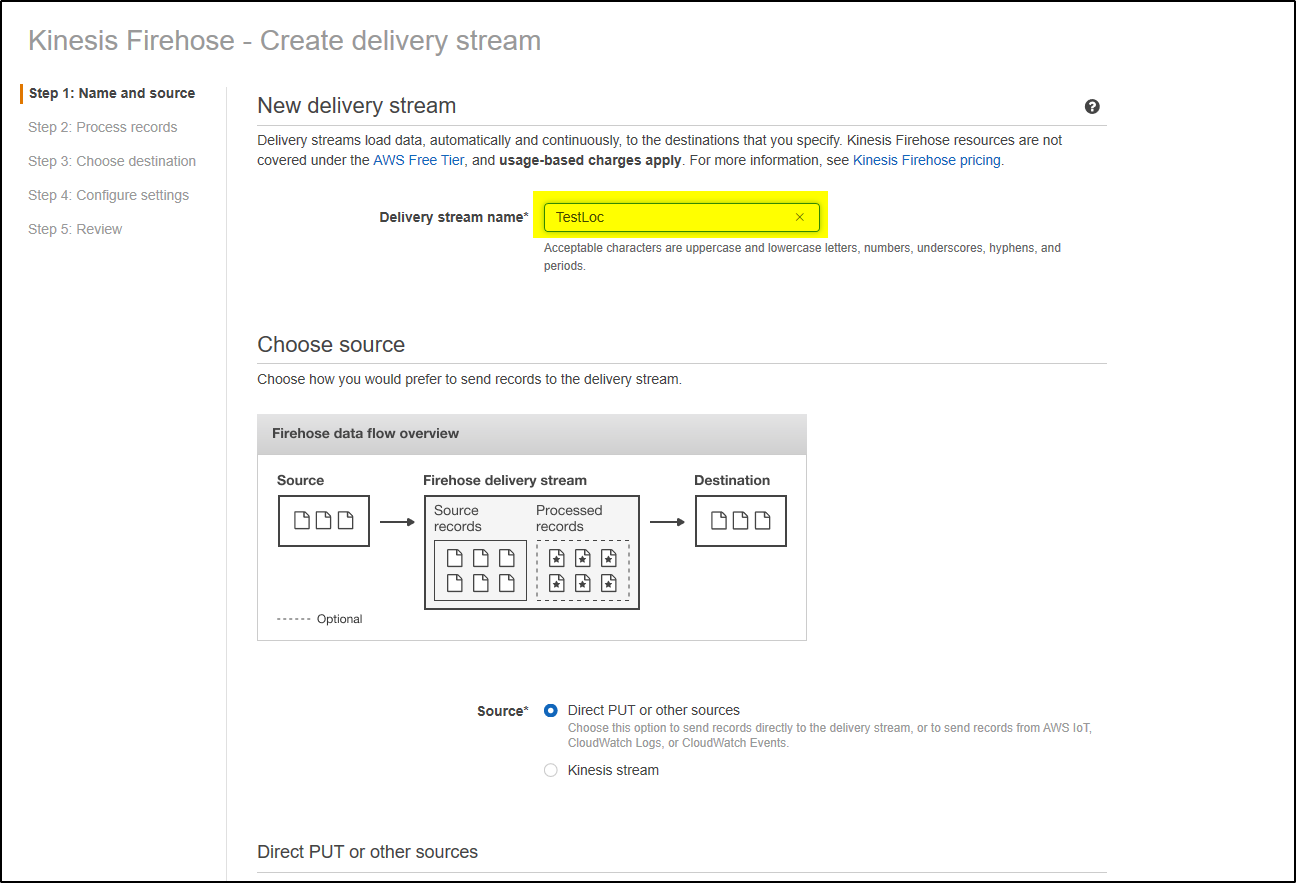

While creating the Amazon Kinesis Firehose delivery stream that receives data from a DynamoDB stream (through a Lambda function), make sure its name is exactly the same as your DynamoDB table name. The final destination for a Kinesis Firehose delivery stream can be Amazon Simple Storage Service (Amazon S3), Amazon Elasticsearch Service, or Amazon Redshift. In this article we set the destination to an S3 bucket. Make sure the S3 bucket where the delivery stream will save its output data has already been created.
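The article does not show the Lambda function. A likely reason for the name-matching rule is that the function finds the target delivery stream from the table name embedded in each record's `eventSourceARN`. The sketch below shows that lookup. It assumes the standard DynamoDB stream ARN layout. The helper name `table_name_from_stream_arn` and the example ARN are hypothetical.

```python
def table_name_from_stream_arn(event_source_arn: str) -> str:
    """Extract the table name from a DynamoDB stream ARN.

    Expected shape:
    arn:aws:dynamodb:<region>:<account-id>:table/<TableName>/stream/<label>
    """
    # Split off only the first five colon-separated fields: the stream label
    # is a timestamp and itself contains colons.
    fields = event_source_arn.split(":", 5)
    if len(fields) != 6 or fields[2] != "dynamodb":
        raise ValueError(f"not a DynamoDB ARN: {event_source_arn}")
    segments = fields[5].split("/", 3)
    if len(segments) != 4 or segments[0] != "table" or segments[2] != "stream":
        raise ValueError(f"not a DynamoDB stream ARN: {event_source_arn}")
    return segments[1]


# Hypothetical example ARN; the returned table name doubles as the
# Firehose delivery stream name, which is why the two must match exactly.
arn = "arn:aws:dynamodb:us-east-1:123456789012:table/Orders/stream/2015-06-27T00:48:05.899"
print(table_name_from_stream_arn(arn))  # Orders
```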

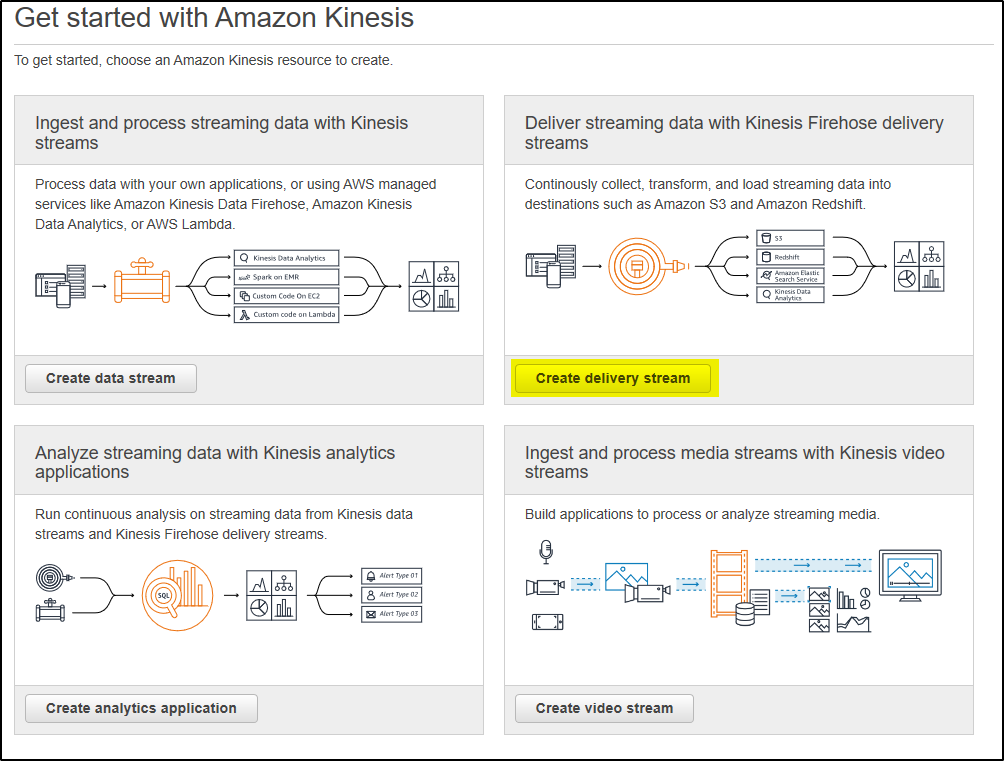

Step 1 – Sign in to the AWS Management Console and open the Kinesis Firehose console at https://console.aws.amazon.com/firehose/

Step 2 – Click on Create Delivery Stream.

Step 3 – Provide the delivery stream name (the same as your DynamoDB table name), leave the rest as default, and click Next.
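The stream name must mirror the table name, so it is worth checking that the table name is a legal Firehose name in the first place. The sketch below assumes the naming limits AWS documents: Firehose delivery stream names may be up to 64 characters and DynamoDB table names up to 255, and both allow only letters, digits, `_`, `.` and `-`. Check the current documentation before relying on these limits. `can_mirror_table_name` is a hypothetical helper.

```python
import re

# Assumed naming rules (verify against current AWS docs): Firehose stream
# names are 1-64 characters, DynamoDB table names 3-255 characters, and both
# allow only letters, digits, "_", "." and "-".
FIREHOSE_NAME_MAX = 64
_NAME_CHARS = re.compile(r"[A-Za-z0-9_.-]+")


def can_mirror_table_name(table_name: str) -> bool:
    """Return True if this table name is also a legal Firehose stream name."""
    return (
        1 <= len(table_name) <= FIREHOSE_NAME_MAX
        and _NAME_CHARS.fullmatch(table_name) is not None
    )


print(can_mirror_table_name("Orders"))   # True
print(can_mirror_table_name("T" * 100))  # False: a valid table name, too long for Firehose
```

A table whose name fails this check cannot use the "same name" convention described above.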

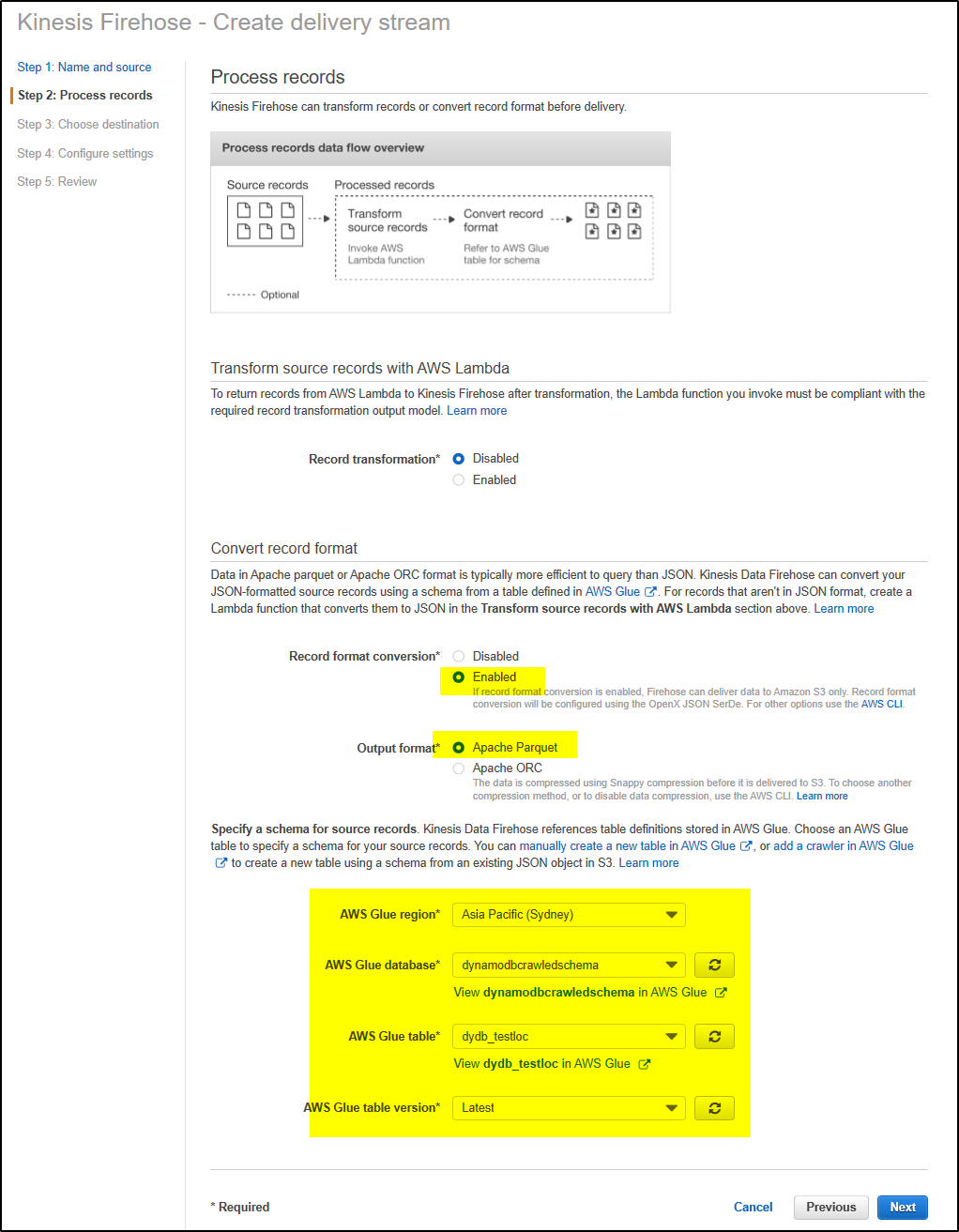

Step 4 – On the Process records screen, enable “Record format conversion” and select Apache Parquet as the “Output format”. Also provide the “AWS Glue region”, “AWS Glue database”, “AWS Glue table” and “AWS Glue table version” details. Click Next.
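If you script this step instead of using the console, the Process records settings appear to correspond to the `DataFormatConversionConfiguration` block of the Firehose `CreateDeliveryStream` API. The sketch below builds that block as a plain dictionary. It assumes the Lambda function sends JSON records, which is why it uses the OpenX JSON SerDe. The helper name, the role ARN and the Glue names in the usage lines are placeholders.

```python
def parquet_conversion_config(role_arn: str, region: str, database: str,
                              table: str, version_id: str = "LATEST") -> dict:
    """Build a DataFormatConversionConfiguration (JSON in, Parquet out)."""
    return {
        "Enabled": True,
        # The four values the console asks for, plus the IAM role Firehose
        # uses to read the table definition from the AWS Glue Data Catalog.
        "SchemaConfiguration": {
            "RoleARN": role_arn,
            "Region": region,
            "DatabaseName": database,
            "TableName": table,
            "VersionId": version_id,  # "LATEST" picks up schema updates
        },
        # Assumption: the Lambda function sends JSON records.
        "InputFormatConfiguration": {"Deserializer": {"OpenXJsonSerDe": {}}},
        "OutputFormatConfiguration": {"Serializer": {"ParquetSerDe": {}}},
    }


cfg = parquet_conversion_config(
    role_arn="arn:aws:iam::123456789012:role/firehose-delivery-role",  # placeholder
    region="us-east-1", database="my_glue_db", table="orders")           # placeholders
print(cfg["SchemaConfiguration"]["VersionId"])  # LATEST
```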

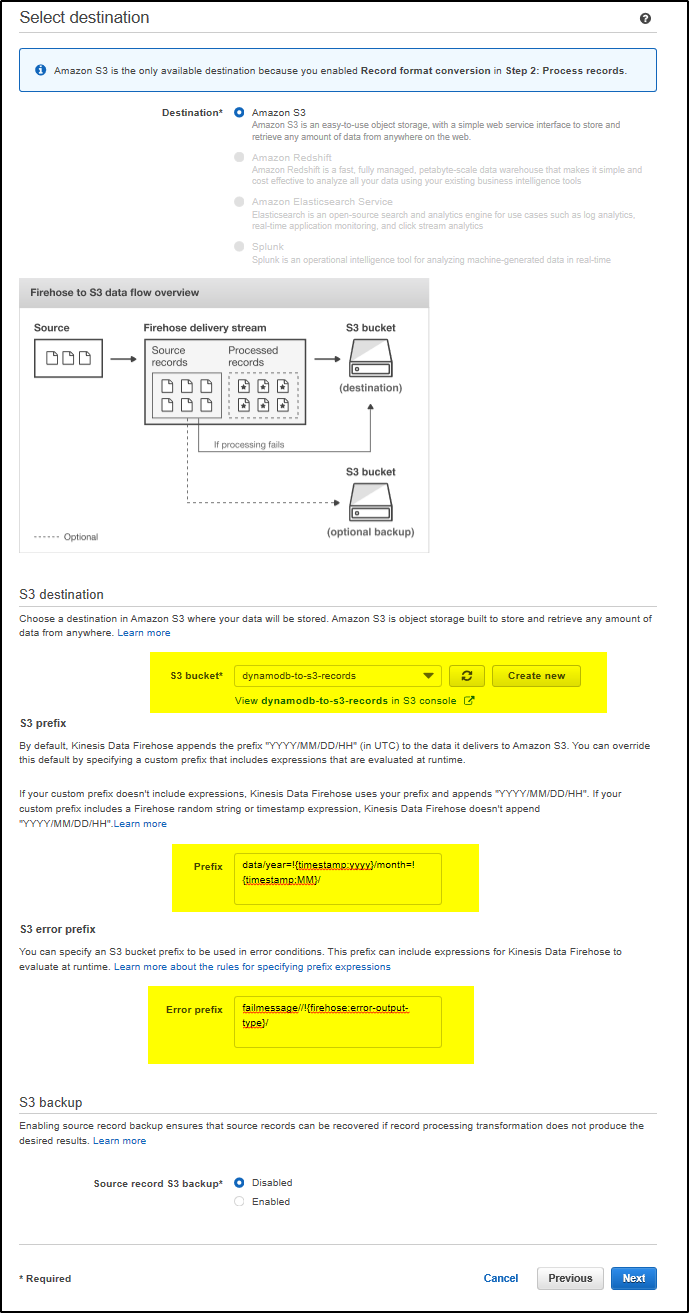

Step 5 – On the “Select Destination” screen, provide the “S3 bucket” name, the “Prefix” and the “Error Prefix”. Click Next.
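In the API, the destination screen maps to fields of `ExtendedS3DestinationConfiguration`. One detail the console hides is that the API takes a bucket ARN rather than a bare bucket name. The sketch below shows that mapping. `s3_destination_fields` and the example values are hypothetical.

```python
def s3_destination_fields(bucket_name: str, prefix: str, error_prefix: str) -> dict:
    """Map the "Select Destination" inputs to CreateDeliveryStream fields."""
    return {
        # S3 bucket ARNs carry no region or account ID.
        "BucketARN": f"arn:aws:s3:::{bucket_name}",
        # Prepended to the key of every object Firehose delivers.
        "Prefix": prefix,
        # Used instead of Prefix for records Firehose fails to deliver or convert.
        "ErrorOutputPrefix": error_prefix,
    }


print(s3_destination_fields("my-output-bucket", "orders/", "orders-errors/")["BucketARN"])
# arn:aws:s3:::my-output-bucket
```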

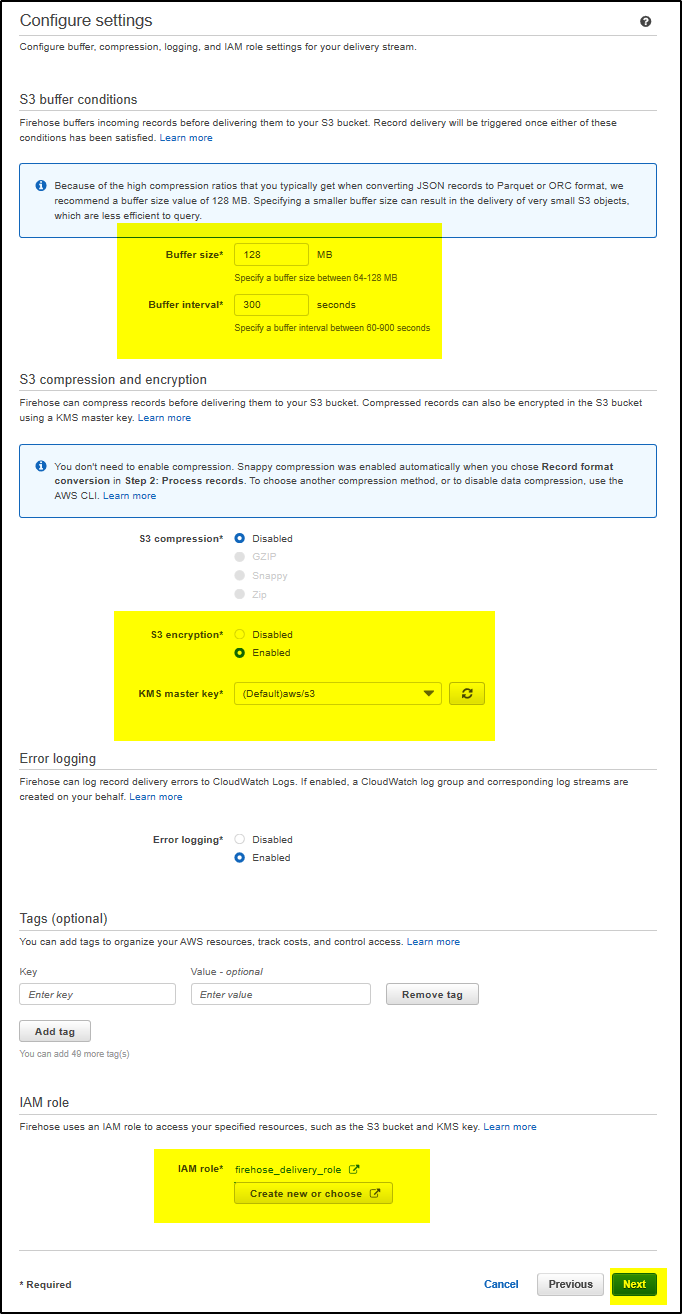

Step 6 – On the “Configure settings” screen, provide the “Buffer size” and “Buffer interval”. Enable “S3 encryption” and select the default KMS master key. The delivery stream also needs an IAM role that can access the S3 bucket and the KMS key: under IAM role, click “Create new or choose”. This opens the IAM console, which shows the name of the role that will be created. Click “Allow”, then click Next.
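In the API, the encryption choice from Step 6 is expressed through `EncryptionConfiguration`. The sketch below assumes you pass the ARN of the KMS key you selected. `kms_key_arn` and the example key ARN are placeholders. The sketch also keeps `CompressionFormat` at `UNCOMPRESSED`. As far as we know, Firehose does not allow S3-level compression when record format conversion is enabled; Parquet compression is configured on the serializer instead.

```python
from typing import Optional


def encryption_settings(kms_key_arn: Optional[str]) -> dict:
    """Return the S3 encryption and compression fields for the destination."""
    if kms_key_arn:
        # Server-side encryption of delivered objects with the chosen KMS key.
        encryption = {"KMSEncryptionConfig": {"AWSKMSKeyARN": kms_key_arn}}
    else:
        encryption = {"NoEncryptionConfig": "NoEncryption"}
    return {
        "EncryptionConfiguration": encryption,
        # Parquet output is compressed by the serializer (ParquetSerDe),
        # so the S3-level setting stays UNCOMPRESSED.
        "CompressionFormat": "UNCOMPRESSED",
    }


key = "arn:aws:kms:us-east-1:123456789012:key/EXAMPLE-KEY-ID"  # placeholder
print(encryption_settings(None)["EncryptionConfiguration"])
# {'NoEncryptionConfig': 'NoEncryption'}
```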

Note: You can set both size-based and time-based limits on how much data Firehose buffers before writing to S3. There is a tradeoff between the number of requests per second to S3 and the delay before data reaches S3, depending on whether you favour fewer large objects or many small objects. Whichever condition is met first, time or size, triggers the write to S3. For this example, the buffer size is set to its maximum of 128 MB and the buffer interval to its maximum of 900 seconds. You can compress or encrypt your data before it is written to S3, and you can enable or disable error logging to CloudWatch Logs.
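The "whichever comes first" rule can be checked with simple arithmetic. At a steady 0.1 MB/s, filling 128 MB takes 1,280 s, so the 900 s interval fires first. At 1 MB/s, the buffer fills in 128 s. The sketch below shows this calculation. It assumes a constant ingest rate, which is a simplification, and the helper names are hypothetical. `buffering_hints` builds the corresponding `BufferingHints` block of the API.

```python
MAX_BUFFER_MB = 128        # maxima used in this article's example
MAX_BUFFER_SECONDS = 900


def buffering_hints(size_mb: int = MAX_BUFFER_MB,
                    interval_s: int = MAX_BUFFER_SECONDS) -> dict:
    """Build the BufferingHints block, rejecting values above the maxima."""
    if not 0 < size_mb <= MAX_BUFFER_MB:
        raise ValueError(f"buffer size must be > 0 and <= {MAX_BUFFER_MB} MB")
    if not 0 < interval_s <= MAX_BUFFER_SECONDS:
        raise ValueError(f"buffer interval must be > 0 and <= {MAX_BUFFER_SECONDS} s")
    return {"SizeInMBs": size_mb, "IntervalInSeconds": interval_s}


def first_trigger(ingest_mb_per_s: float, size_mb: int = MAX_BUFFER_MB,
                  interval_s: int = MAX_BUFFER_SECONDS):
    """Estimate which limit flushes the buffer first at a steady ingest rate."""
    if ingest_mb_per_s <= 0:
        return "interval", float(interval_s)
    seconds_to_fill = size_mb / ingest_mb_per_s
    if seconds_to_fill < interval_s:
        return "size", seconds_to_fill
    return "interval", float(interval_s)


print(first_trigger(0.1))  # ('interval', 900.0): 128 MB would take 1,280 s to fill
print(first_trigger(1.0))  # ('size', 128.0): the buffer fills in 128 s
```

Low-volume tables therefore tend to produce one object per interval, while busy tables produce objects of roughly the buffer size.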

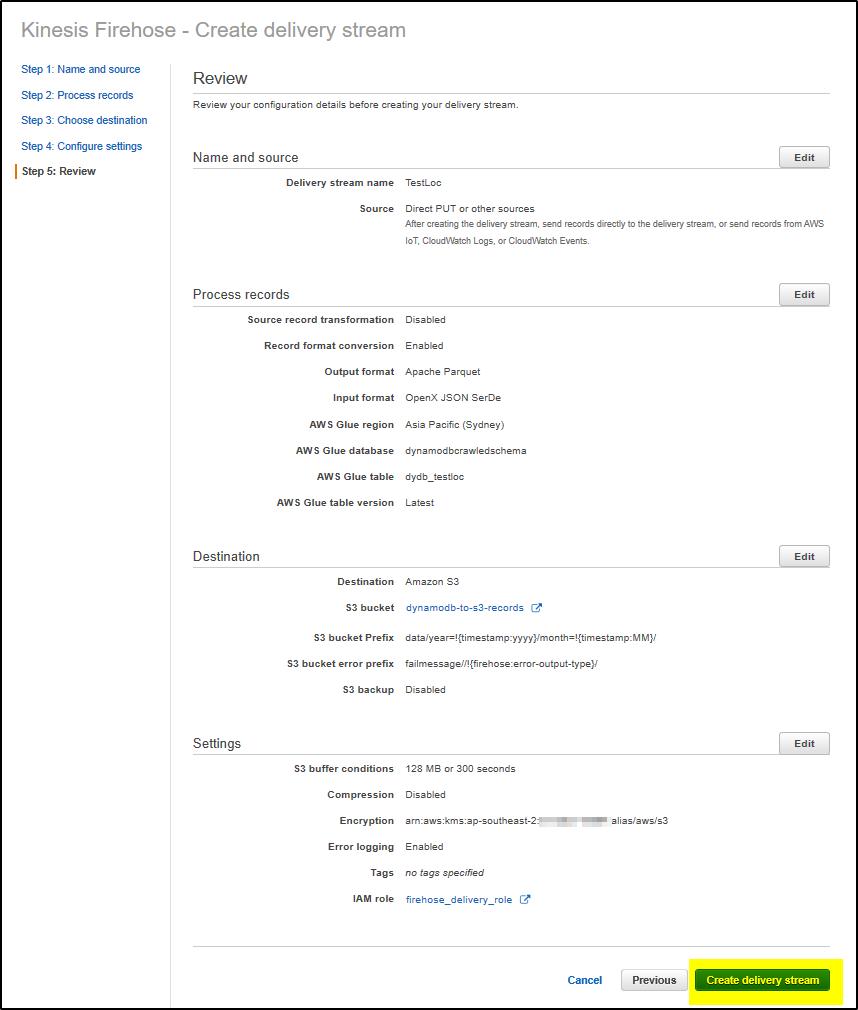

Step 7 – On the Review screen, click the Create Delivery Stream button.
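A newly created stream is not usable immediately. It starts in the `CREATING` state and accepts data once it becomes `ACTIVE`. The sketch below polls until that happens. It assumes you pass in a describe function such as boto3's `describe_delivery_stream`, which keeps the function testable without AWS credentials. `wait_until_active` is a hypothetical helper.

```python
import time
from typing import Callable


def wait_until_active(describe: Callable[..., dict], name: str,
                      timeout_s: float = 300, poll_s: float = 10,
                      sleep: Callable[[float], None] = time.sleep) -> dict:
    """Poll DescribeDeliveryStream until the stream leaves CREATING."""
    deadline = time.monotonic() + timeout_s
    while True:
        desc = describe(DeliveryStreamName=name)["DeliveryStreamDescription"]
        status = desc["DeliveryStreamStatus"]
        if status == "ACTIVE":
            return desc
        if status != "CREATING":
            # e.g. CREATING_FAILED; desc may carry a FailureDescription.
            raise RuntimeError(f"{name} is in state {status}")
        if time.monotonic() >= deadline:
            raise TimeoutError(f"{name} still CREATING after {timeout_s}s")
        sleep(poll_s)


# Hypothetical usage (needs boto3 and AWS credentials):
# import boto3
# firehose = boto3.client("firehose")
# wait_until_active(firehose.describe_delivery_stream, "Orders")
```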

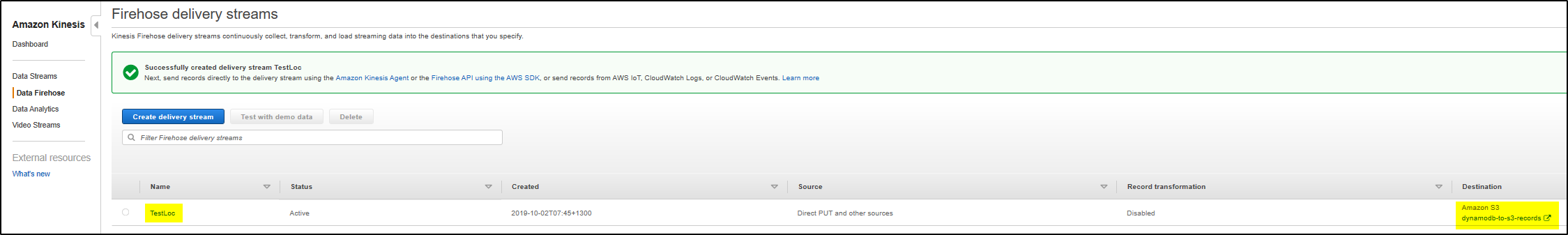

Step 8 – The Kinesis Firehose delivery stream is now ready to receive data from the Lambda function. It converts each record to Parquet according to the schema of the Glue database/table and saves the result in the S3 bucket.