You can install a Kubernetes cluster on AWS using a tool called kops. kops provides a fully automated installation of the cluster. It uses DNS to identify the cluster, and all masters and nodes run in Auto Scaling groups.

In this article we will see how a kops cluster template can be used to create a Kubernetes (k8s) cluster on AWS as a repeatable DevOps process. The template creates the cluster in a private network topology, and the bastion host is reachable through a load balancer.

Prerequisites:

- Install kubectl

- Install kops

- Create an AWS account, generate access keys for an IAM user and configure them with the AWS CLI (`aws configure`).

- Install Terraform

- Install jq (used to read the Terraform outputs)

Steps:

- Create the AWS resources. In this article Terraform is used to create the AWS resources: the VPC, the Route53 DNS zone, NAT gateways, private and public subnets, an Internet Gateway, route tables and Elastic IPs. Download the Terraform scripts from here and copy them to a local folder. stack.tf is the main script that starts the resource creation, and the modules folder contains the scripts for the network and services. The following must be in place before you run the Terraform script.

Prerequisite AWS resources:

-> Create an S3 bucket to store the Terraform state for the AWS resources

-> Decide on a Route53 private domain name [Note: do not use k8s.local as the domain name; kops treats cluster names ending in .k8s.local as gossip-based clusters that do not use Route53 DNS]

-> Keep the IAM user's access key and secret key handy

-> Get the AWS account number

-> Decide on the cluster name and the VPC CIDR range
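The first and fourth items above can also be done from the command line. The following is a minimal sketch, assuming the AWS CLI is already configured with the IAM user's keys; the function names and the `DRY_RUN` switch are illustrative helpers, not part of the downloaded scripts.

```shell
#!/usr/bin/env bash
# Sketch: prepare the Terraform state bucket and look up the AWS account number.
# Hypothetical helpers; set DRY_RUN=1 to print the commands instead of running them.

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# create_state_bucket BUCKET REGION
create_state_bucket() {
  local bucket="$1" region="$2"
  if [ "${region}" = "us-east-1" ]; then
    # us-east-1 is the default location and rejects an explicit LocationConstraint
    run aws s3api create-bucket --bucket "${bucket}" --region "${region}"
  else
    run aws s3api create-bucket --bucket "${bucket}" --region "${region}" \
      --create-bucket-configuration "LocationConstraint=${region}"
  fi
  # Versioning keeps older copies of the state file in case one gets corrupted
  run aws s3api put-bucket-versioning --bucket "${bucket}" \
    --versioning-configuration Status=Enabled
}

# Prints the account number that belongs to the configured credentials
aws_account_id() {
  run aws sts get-caller-identity --query Account --output text
}
```

For example, `create_state_bucket my-tf-state-bucket us-west-2` (a placeholder bucket name) followed by `aws_account_id` yields two of the values that go into variable.tf.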

Configure these values in the variable.tf file found in the Main folder.

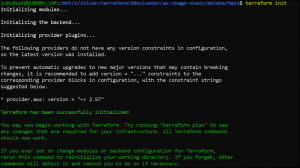

Now run the Terraform commands to create the AWS resources.
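These are the standard Terraform init, plan and apply steps. A minimal sketch, assuming the state backend is configured in the folder that contains stack.tf; the function name, the plan file name `tfplan` and the `DRY_RUN` switch are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: create the AWS resources with the usual Terraform cycle.
# Hypothetical helper; set DRY_RUN=1 to print the commands instead of running them.

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# terraform_apply [DIR]: DIR is the folder holding stack.tf (default: current folder)
terraform_apply() {
  local dir="${1:-.}"
  # Run in a subshell so the caller's working directory is left unchanged.
  # Applying the saved plan runs exactly what was reviewed, without a prompt.
  (
    cd "${dir}" &&
      run terraform init &&
      run terraform plan -out=tfplan &&
      run terraform apply tfplan
  )
}
```

Saving the plan to a file means what gets applied is exactly what was shown by `plan`; afterwards `terraform output` lists the values used in the next step.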

Once the AWS resources are created, their resource IDs and endpoint details can be fed into the kops cluster template. Download this template from here. It contains 2 files: cluster_template.yaml and master.yaml.

Now run the following commands from the Terraform folder to read the Terraform outputs. These values are substituted into the template to produce cluster.yaml, which is then used with kops to create the Kubernetes cluster.

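```shell
# Read all Terraform outputs as JSON (run from the Terraform folder)
TF_OUTPUT=$(terraform output -json)
# Each output is an object; its .value field holds the actual value
CLUSTER_NAME="$(echo "${TF_OUTPUT}" | jq -r .cluster_name.value)"
STATE="s3://$(echo "${TF_OUTPUT}" | jq -r .kops_s3_bucket.value)"
```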
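If an output name does not match what Terraform actually produced, `jq -r` prints the string `null` instead of failing, and STATE silently becomes `s3://null`. A small guard, sketched below with a hypothetical helper name, stops the process before kops is called with such values.

```shell
#!/usr/bin/env bash
# Sketch: stop early when a value read from `terraform output -json` is missing.
# `jq -r` prints the literal string "null" for a missing key, so an empty string,
# "null", and an "s3://" prefix followed by nothing or "null" all count as missing.

# require_value NAME VALUE
require_value() {
  local name="$1" value="${2#s3://}"
  if [ -z "${value}" ] || [ "${value}" = "null" ]; then
    echo "error: ${name} is missing; check the names in 'terraform output'" >&2
    return 1
  fi
}
```

Typical use: `require_value CLUSTER_NAME "${CLUSTER_NAME}" && require_value STATE "${STATE}"` right after the two variables are set.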

Now create the cluster.yaml file with the command below. Make sure the `--template` value points to the folder where the downloaded YAML files are placed (tmp/ in this example). The `<(...)` process substitution requires bash.

```shell
kops toolbox template --name "${CLUSTER_NAME}" --values <(echo "${TF_OUTPUT}") --template tmp/ --output cluster.yaml
```

In the generated cluster.yaml, the template placeholders are replaced with the values from the Terraform outputs.
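Before handing cluster.yaml to kops, it is worth checking that every placeholder was actually filled in. Depending on how the template is rendered, a missing key may appear as the Go template text `<no value>` instead of stopping the command, so the sketch below (a hypothetical helper) greps for that and for leftover `{{` markers.

```shell
#!/usr/bin/env bash
# Sketch: sanity-check the rendered cluster spec before it is loaded into kops.

# check_rendered FILE
check_rendered() {
  local file="$1"
  if [ ! -s "${file}" ]; then
    echo "error: ${file} is missing or empty" >&2
    return 1
  fi
  # Print any offending lines (with line numbers) to stderr
  if grep -n -e '<no value>' -e '{{' "${file}" >&2; then
    echo "error: ${file} still contains unresolved template values" >&2
    return 1
  fi
}
```

Run `check_rendered cluster.yaml` before the `kops replace` step; a non-zero exit means the template and the Terraform output names disagree.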

Next, load this cluster.yaml into kops:

```shell
kops replace -f cluster.yaml --force --state "${STATE}" --name "${CLUSTER_NAME}"
```

This creates or updates the cluster's kops state in the S3 bucket. No EC2 instances are launched yet.
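To see what the replace step stored, you can list the cluster's prefix in the state bucket and ask kops to print the spec back. A sketch using the standard `aws s3 ls` and `kops get cluster` commands; the wrapper function and the `DRY_RUN` switch are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: inspect the cluster state that `kops replace` wrote to S3.
# Hypothetical helper; set DRY_RUN=1 to print the commands instead of running them.

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# show_kops_state STATE CLUSTER_NAME
show_kops_state() {
  local state="$1" name="$2"
  # Objects kops keeps under the cluster's prefix (cluster spec, instance groups, ...)
  run aws s3 ls "${state}/${name}/"
  # The cluster spec as kops now sees it
  run kops get cluster "${name}" --state "${state}" -o yaml
}
```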

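Now add your SSH public key. It is needed later to log in to the bastion or the nodes with your own SSH private key.

```shell
kops create secret --name "${CLUSTER_NAME}" --state "${STATE}" sshpublickey admin -i ~/.ssh/id_rsa.pub
```

On newer kops releases this subcommand has been replaced by `kops create sshpublickey`; check `kops create --help` for your version.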
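If you do not have a key pair yet, generate one before adding it to kops. Logging in later typically goes through the bastion with agent forwarding, so the same private key also reaches the masters and nodes. A sketch, assuming an RSA key at ~/.ssh/id_rsa and a running ssh-agent; the login user (typically `admin` on Debian images or `ubuntu` on Ubuntu images) and the bastion address are placeholders, and the helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: make sure an SSH key exists, then log in to the bastion with agent forwarding.
# Hypothetical helpers; set DRY_RUN=1 to print the commands instead of running them.

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# ensure_ssh_key [KEY_PATH]
ensure_ssh_key() {
  local key="${1:-${HOME}/.ssh/id_rsa}"
  if [ -f "${key}.pub" ]; then
    echo "using existing key ${key}.pub"
  else
    # Empty passphrase for brevity; use one for anything beyond a demo
    run ssh-keygen -t rsa -b 4096 -N "" -f "${key}"
  fi
}

# bastion_ssh USER HOST [KEY_PATH]
bastion_ssh() {
  local user="$1" host="$2" key="${3:-${HOME}/.ssh/id_rsa}"
  # Load the key into ssh-agent and forward the agent (-A) for the next hop
  run ssh-add "${key}"
  run ssh -A "${user}@${host}"
}
```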

Now run the final kops command to create the cluster:

```shell
kops update cluster --state "${STATE}" --name "${CLUSTER_NAME}" --yes
```

This creates a cluster with 3 masters and 3 nodes.
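In an automated pipeline it helps to review the planned changes before they are applied: `kops update cluster` without `--yes` only prints what it would do. A sketch of a preview-then-confirm step; the function name, the prompt and the `DRY_RUN` switch are illustrative, and a non-interactive CI job would replace the prompt with its own approval gate.

```shell
#!/usr/bin/env bash
# Sketch: preview the cloud changes, then apply them only after confirmation.
# Hypothetical helper; set DRY_RUN=1 to print the kops commands instead of running them.

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# update_cluster STATE CLUSTER_NAME
update_cluster() {
  local state="$1" name="$2" answer=""
  # Without --yes, kops only prints the changes it would make
  run kops update cluster --name "${name}" --state "${state}"
  printf 'Apply these changes? [y/N] ' >&2
  read -r answer
  if [ "${answer}" = "y" ]; then
    run kops update cluster --name "${name}" --state "${state}" --yes
  else
    echo "aborted; nothing was changed"
  fi
}
```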

To confirm that the cluster has been created, log in to the bastion server and install kops and kubectl on it with these commands:

```shell
# Install the latest kops release
KOPS_FLAVOR="kops-linux-amd64"
KOPS_VERSION=$(curl -s https://api.github.com/repos/kubernetes/kops/releases/latest | grep tag_name | cut -d '"' -f 4)
KOPS_URL="https://github.com/kubernetes/kops/releases/download/${KOPS_VERSION}/${KOPS_FLAVOR}"
curl -sLO "${KOPS_URL}"
chmod +x "${KOPS_FLAVOR}"
sudo mv "${KOPS_FLAVOR}" /usr/local/bin/kops

# Install the latest stable kubectl (dl.k8s.io is the current official download location)
KUBECTL_VERSION=$(curl -sL https://dl.k8s.io/release/stable.txt)
KUBECTL_URL="https://dl.k8s.io/release/${KUBECTL_VERSION}/bin/linux/amd64/kubectl"
curl -sLO "${KUBECTL_URL}"
chmod +x kubectl
sudo mv kubectl /usr/local/bin/kubectl

# Point kops at the cluster; this demo uses the bucket s3://test-kops-teststore
CLUSTER_NAME="<set clustername>"
STATE="s3://<S3 kops bucketname>"
```

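On the bastion server, configure the AWS CLI so that kops can read the state bucket, and set STATE and CLUSTER_NAME. Then run these commands to get the cluster status:

```shell
kops export kubecfg --name "${CLUSTER_NAME}" --state "${STATE}"
kops validate cluster --name "${CLUSTER_NAME}" --state "${STATE}"
```

On kops 1.19 and later, add `--admin` to the export command if the kubeconfig should include admin credentials.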
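Right after `kops update cluster` the instances are still booting, so validation fails for several minutes. A sketch that waits for the cluster and then counts the Ready nodes, which should reach 6 for the 3 masters and 3 nodes created here. It assumes a kops version whose `validate cluster` command supports `--wait`; the helper names and the `DRY_RUN` switch are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: wait for the cluster to validate, then count Ready nodes.
# Hypothetical helpers; set DRY_RUN=1 to print the kops command instead of running it.

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# wait_for_cluster STATE CLUSTER_NAME [TIMEOUT]
wait_for_cluster() {
  local state="$1" name="$2" timeout="${3:-15m}"
  run kops validate cluster --name "${name}" --state "${state}" --wait "${timeout}"
}

# count_ready_nodes: reads `kubectl get nodes --no-headers` output on stdin
# and prints how many rows have STATUS (column 2) equal to Ready
count_ready_nodes() {
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}
```

On the bastion, `wait_for_cluster "${STATE}" "${CLUSTER_NAME}" && kubectl get nodes --no-headers | count_ready_nodes` should print 6 once all instances have joined.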

This way the cluster.yaml file can be reused multiple times to recreate the cluster.
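For example, tearing the cluster down and building it again from the same file could look like the sketch below. `kops delete cluster` without `--yes` only lists what it would delete, and that list is worth reading first: the VPC, subnets and NAT gateways come from Terraform, and kops should leave resources it did not create in place, but confirm this in the preview. Deleting the cluster also removes its kops state, so the SSH key is added again. The function names and the `DRY_RUN` switch are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: delete the cluster and rebuild it from the same cluster.yaml.
# Hypothetical helpers; set DRY_RUN=1 to print the kops commands instead of running them.

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# teardown_preview STATE CLUSTER_NAME: lists the resources kops would delete
teardown_preview() {
  run kops delete cluster --name "$2" --state "$1"
}

# teardown STATE CLUSTER_NAME: deletes the cluster and its kops state
teardown() {
  run kops delete cluster --name "$2" --state "$1" --yes
}

# rebuild STATE CLUSTER_NAME [SPEC]: the same commands as the steps above
rebuild() {
  local state="$1" name="$2" spec="${3:-cluster.yaml}"
  run kops replace -f "${spec}" --force --state "${state}" --name "${name}" &&
    run kops create secret --name "${name}" --state "${state}" sshpublickey admin -i "${HOME}/.ssh/id_rsa.pub" &&
    run kops update cluster --state "${state}" --name "${name}" --yes
}
```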

Resources deployed using Terraform and cluster.yaml:

- one VPC with 3 private and 3 public subnets in 3 different Availability Zones,

- three NAT gateways, one in the public subnet of each Availability Zone,

- three self-healing Kubernetes master instances, one in each Availability Zone's private subnet, each in its own Auto Scaling group,

- three node instances in one Auto Scaling group spread across all Availability Zones,

- one self-healing bastion host in the public subnet of 1 Availability Zone,

- three Elastic IP addresses, one for each NAT gateway,

- one internal (or, optionally, public) ELB load balancer for HTTPS access to the Kubernetes API,

- two security groups: 1 for the bastion host and 1 for the Kubernetes hosts (masters and nodes),

- IAM roles for the bastion hosts, K8s nodes and master hosts,

- one S3 bucket for the kops state store,

- one Route53 private zone for the VPC.
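Most of these resources can be listed with the AWS CLI to check the deployment against the list above. A sketch, assuming you know the VPC ID (for example from `terraform output`; the exact output name depends on the downloaded scripts); the helper name and the `DRY_RUN` switch are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: list subnets, NAT gateways and running instances in the cluster VPC.
# Hypothetical helper; set DRY_RUN=1 to print the commands instead of running them.

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# list_vpc_resources VPC_ID
list_vpc_resources() {
  local vpc="$1"
  # Expect 6 subnets: 3 public and 3 private, one pair per Availability Zone
  run aws ec2 describe-subnets --filters "Name=vpc-id,Values=${vpc}" \
    --query 'Subnets[].[AvailabilityZone,SubnetId,CidrBlock]' --output table
  # Expect 3 NAT gateways (this command takes --filter, not --filters)
  run aws ec2 describe-nat-gateways --filter "Name=vpc-id,Values=${vpc}" \
    --query 'NatGateways[].[SubnetId,State]' --output table
  # Expect 7 running instances: 3 masters, 3 nodes and the bastion
  run aws ec2 describe-instances \
    --filters "Name=vpc-id,Values=${vpc}" "Name=instance-state-name,Values=running" \
    --query 'Reservations[].Instances[].[Placement.AvailabilityZone,PrivateIpAddress]' \
    --output table
}
```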